I was just wondering how ChatGPT sometimes comes up with totally made-up information.

In this case it was about a well-documented SQL Server system view. It is officially documented, and there must be far more correct than incorrect information about it on the internet for the model to learn from.

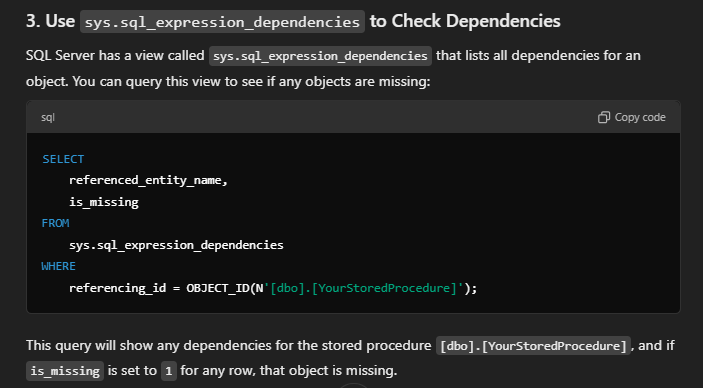

This is the incorrect information I was given:

sys.sql_expression_dependencies obviously DOES NOT have an “is_missing” column. It would be cool if it did, but it doesn’t.
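If you want to check this yourself instead of trusting either me or ChatGPT, one way (a minimal sketch, assuming you can connect to any SQL Server instance) is to ask the catalog which columns the view actually exposes. The queries below only read the standard `sys.all_columns` catalog view and the built-in `OBJECT_ID` and `TYPE_NAME` functions:

```sql
-- List every column SQL Server actually exposes for the system view.
SELECT c.column_id,
       c.name,
       TYPE_NAME(c.user_type_id) AS data_type
FROM sys.all_columns AS c
WHERE c.object_id = OBJECT_ID(N'sys.sql_expression_dependencies')
ORDER BY c.column_id;

-- Look specifically for the column ChatGPT claimed exists.
SELECT c.name
FROM sys.all_columns AS c
WHERE c.object_id = OBJECT_ID(N'sys.sql_expression_dependencies')
  AND c.name = N'is_missing';
```

If the second query returns no rows, the column does not exist on that instance, no matter how confidently an AI tool says otherwise.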

Documentation here:

Don’t get me wrong, I love ChatGPT and other AI tools as little helpers, but it makes me wonder how many people trust them entirely and just copy and paste whatever they produce.
